Reading Prices in Grocery Stores using AI

As part of a personal project I needed to autonomously read the contents of pricetags in grocery stores. The exact layout and contents of these vary by store, but they are generally epaper tags with at least the price, product name and some product number.

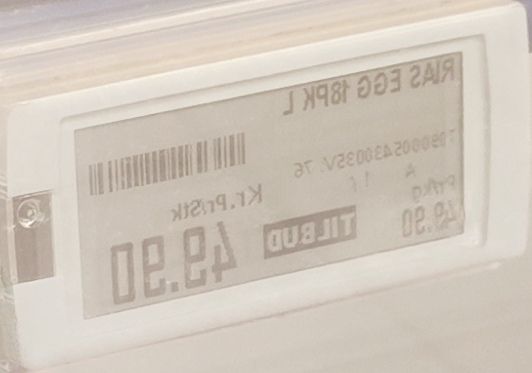

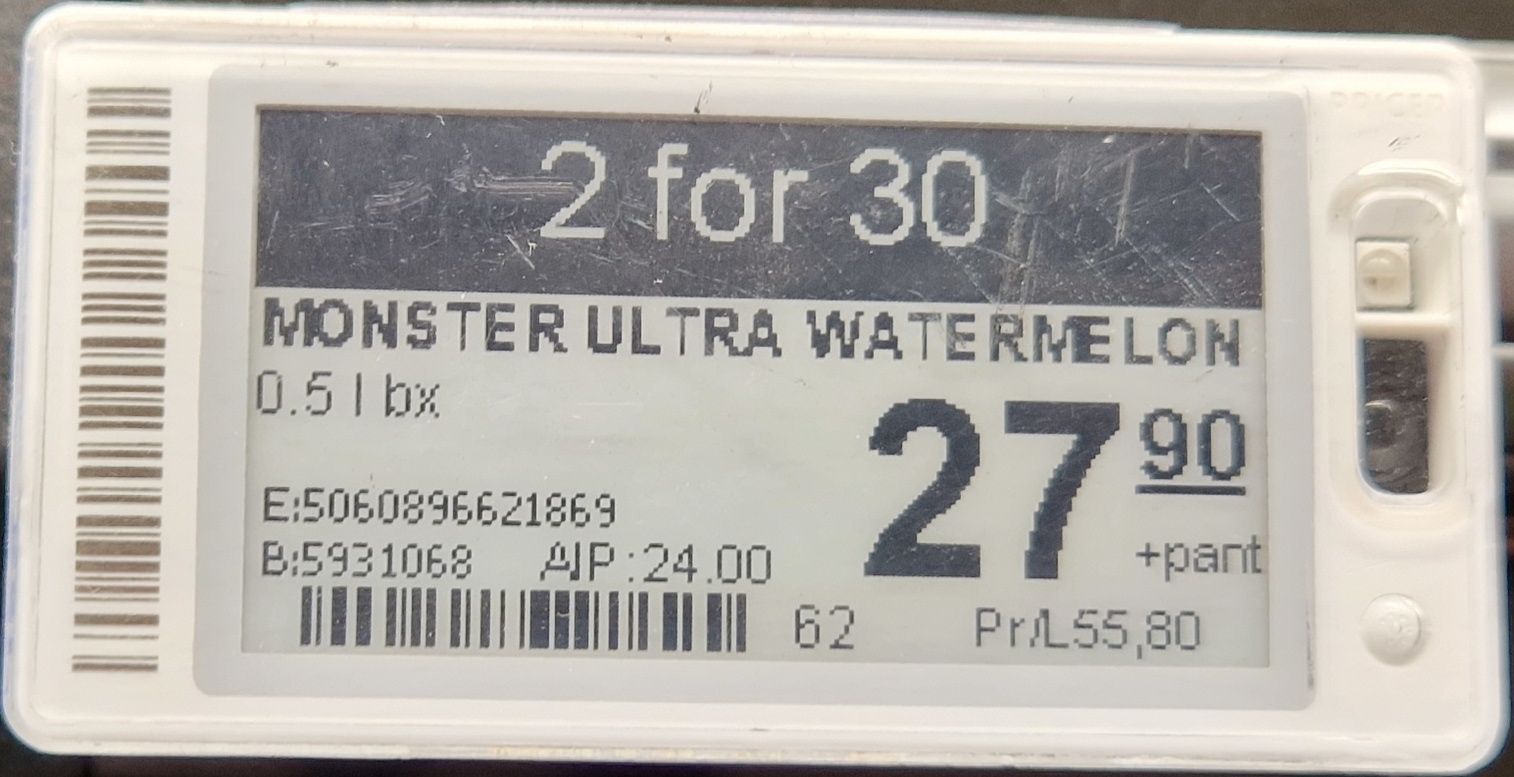

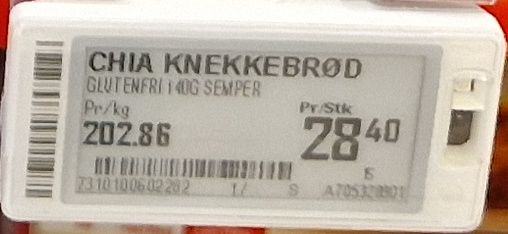

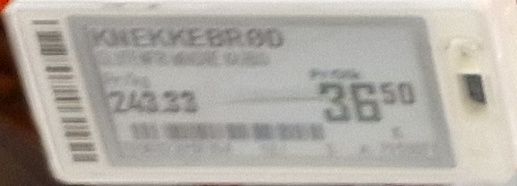

Images are often of poor quality, and the model needs to handle this gracefully. Some examples of the cases the model will need to handle:

- Slightly unsharp to completely unreadable images

- Cropped/obscured tags

- Specular reflections or dirt obscuring the tag

- Incorrect region detections

Base model #

The base model I chose for this task was donut-base.

Donut is an encoder-decoder transformer model, with a Swin Transformer serving as the encoder and BART serving as the decoder. The base model has been pretrained on a large synthetic dataset to do OCR.

We will fine tune the model to extract semantic data from the tags.

Barcodes #

You may have noticed that most of the pricetag layouts contain some form of barcode. This is sometimes an EAN-13 barcode containing the product number, sometimes an ITF barcode containing an internal store number, and sometimes something else.

We want the model to be able to read the entire tag, including barcodes.

Primer on barcodes #

1D barcodes come in various forms, each differing in data capacity, error correction levels, and encoding complexity.

Beneath the encoding schemes, 1D barcodes are represented as a linear sequence of bars of varying widths. Data is encoded in the widths of the individual black and white bars. Some barcodes use only narrow and wide bars, while others use several bar widths to encode symbols.

The figure above is a Code 128 barcode. We can see that this format uses 4 different widths for both the black and white bars to represent the data.

So how do we tokenize this data for the model?

The obvious approach (and why it doesn't work) #

The approach that immediately comes to mind is to introduce a set of tokens, each representing a bar of a certain width.

<b1/> - black bar of width 1

<b2/> - black bar of width 2

<b3/> - black bar of width 3

<b4/> - black bar of width 4

<w1/> - white bar of width 1

<w2/> - white bar of width 2

<w3/> - white bar of width 3

<w4/> - white bar of width 4

These tokens can then be assembled into a sequence, probably surrounded by a pair of <barcode>/</barcode> tokens to indicate the beginning and end of the barcode.
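To make the absolute scheme concrete, here is a minimal sketch that turns a list of bar widths into such a token sequence. The function name and the convention that the list alternates black/white starting with black are assumptions made for this illustration.

```python
def absolute_tokens(widths):
    """Turn alternating bar widths (black first) into absolute-width tokens."""
    tokens = ["<barcode>"]
    for i, width in enumerate(widths):
        if not 1 <= width <= 4:
            raise ValueError(f"unsupported bar width: {width}")
        colour = "b" if i % 2 == 0 else "w"  # even index = black, odd = white
        tokens.append(f"<{colour}{width}/>")
    tokens.append("</barcode>")
    return tokens


# Arbitrary example widths, measured in multiples of the narrowest bar.
print(absolute_tokens([2, 1, 1, 2, 1, 4]))
```

Note that every token here depends on knowing the width of the narrowest bar in the whole barcode, which is exactly the problem described next.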

In reality a model trained with this tokenization scheme would not work well. To determine the absolute width of the very first bar, the model would have to look across the entire barcode to establish the scale.

This puts a heavy computational load on the first token in the barcode, and does not give the model any ability to self correct.

If what we fundamentally care about is the relative width of bars, how can this be best represented for the model?

Relative encoding scheme #

A more effective approach is using tokens that represent the relative width of bars, contrasting each bar against its neighbors, rather than defining absolute widths.

I chose to make a single token look at a set of 3 bars, two black and the white between them. Each token uses the initial black bar as a reference, and encodes the relative widths of the succeeding white and black bars.

<b0,0/> - all 3 bars are the same width

<b1,0/> - the white bar is 1 unit wider than the reference bar

<b-1,0/> - the white bar is 1 unit narrower than the reference bar

<b0,-1/> - the second black bar is 1 unit narrower than the reference bar

+ all other relative widths

Using this scheme, we end up with more tokens (each offset ranges from -3 to +3, so 7*7=49 vs 4*2=8), but they are much easier for the model to utilize.
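A minimal sketch of this relative encoding is shown below. It assumes the bar widths are given as a list that starts and ends on a black bar, and that consecutive tokens share a black bar (the second black bar of one token is the reference of the next). That overlap is an assumption of this sketch, not something spelled out above.

```python
def relative_tokens(widths):
    """Encode alternating bar widths (black first, black last) as relative tokens.

    Each token covers (black, white, black) and stores the white bar and the
    second black bar relative to the first black bar of the triple.
    """
    if len(widths) < 3 or len(widths) % 2 == 0:
        raise ValueError("expected an odd number of bars, starting and ending on black")
    tokens = []
    for i in range(0, len(widths) - 2, 2):
        ref, white, black = widths[i], widths[i + 1], widths[i + 2]
        tokens.append(f"<b{white - ref},{black - ref}/>")
    return tokens


print(relative_tokens([2, 1, 1, 2, 3]))  # ['<b-1,-1/>', '<b1,2/>']
```

Because each token only compares a bar with its immediate neighbors, the model never needs to know the global scale of the barcode to emit it.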

Since this is a different format from what the normal decoding algorithms for most barcode formats take by default, the model output has to be converted in code. Overall this is not very complicated to do.
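One possible version of that conversion is sketched here. Relative tokens do not carry the absolute scale, so this sketch assumes the narrowest bar in the barcode is one unit wide and shifts all widths accordingly. Both that normalization and the function name are assumptions for illustration.

```python
import re

TOKEN_RE = re.compile(r"<b(-?\d+),(-?\d+)/>")


def widths_from_relative(tokens, min_width=1):
    """Rebuild alternating bar widths (black first) from relative tokens."""
    widths = [0]  # provisional width of the first black bar
    for token in tokens:
        match = TOKEN_RE.fullmatch(token)
        if match is None:
            raise ValueError(f"not a relative bar token: {token!r}")
        d_white, d_black = int(match.group(1)), int(match.group(2))
        ref = widths[-1]  # the last bar so far is always black
        widths.append(ref + d_white)
        widths.append(ref + d_black)
    # Shift everything so the narrowest bar has width `min_width`.
    shift = min_width - min(widths)
    return [w + shift for w in widths]


print(widths_from_relative(["<b-1,-1/>", "<b1,2/>"]))  # [2, 1, 1, 2, 3]
```

The resulting list of absolute widths can then be handed to an ordinary decoder for the barcode format in question.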

Dataset #

I generated a synthetic dataset of 10000 document images containing both text and barcodes to train the model to read barcodes. The dataset was generated using a modified version of synthdog, the tool the authors of donut used to train the base model.

The full dataset is uploaded here.

Fine tuning for pricetags #

As with training the model for reading barcodes, we need to decide on two main points when finetuning for our specific purpose:

- How do we tokenize the data for the model?

- What data do we train the model on?

Pricetag tokenization scheme #

I went for an XML-ish tokenization scheme, where fields are represented using distinct open and close tokens.

<pricetag>

<title>Juice</title>

</pricetag>

Each of the XML tags in the text above is a separate token.
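As an illustration, a target sequence in this format can be built from a dictionary of fields and parsed back with a few lines of code. This is only a sketch: `title` is the field shown above, while `price` and the helper names are hypothetical, and nested fields are not handled.

```python
import re

FIELD_RE = re.compile(r"<(\w+)>(.*?)</\1>", re.DOTALL)


def to_sequence(fields):
    """Serialize a flat dict of fields into the XML-ish target sequence."""
    body = "".join(f"<{name}>{value}</{name}>" for name, value in fields.items())
    return f"<pricetag>{body}</pricetag>"


def from_sequence(sequence):
    """Parse a generated sequence back into a dict; returns {} if malformed."""
    outer = re.search(r"<pricetag>(.*?)</pricetag>", sequence, re.DOTALL)
    if outer is None:
        return {}
    return {name: value.strip() for name, value in FIELD_RE.findall(outer.group(1))}


sequence = to_sequence({"title": "Juice", "price": "2.50"})  # "price" is hypothetical
print(sequence)
print(from_sequence(sequence))
```

Fields whose closing token never appears in the generated text are simply dropped by the parser, which is a convenient failure mode when the model gives up on an unreadable region.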

Training data #

Although generating synthetic data for pricetag reading was an option, I concluded that the return on investment would be higher with real-world data annotation due to its inherent accuracy and diversity. I believed this to be a better use of my time because:

- The bulk of teaching the model to read is done on the synthetic dataset, so the dataset required to teach the model the specific pricetag layouts should not need to be massive.

- By using real-world data we don't have to worry about subtle biases in synthetic data, since the data we train on is guaranteed to be in distribution.

I found that around 100 hand annotated samples were enough for the model to start performing reasonably well. After this point I started using the model to interactively assist me in annotating more data. After around 500 human validated samples the model was more than good enough to be deployed.

Results #

Here I have deliberately picked challenging cases to show how the model performs.

The model performs more than satisfactorily in the real world.

I considered doing more rigorous benchmarking of the model, but since this is a hobby project I would rather spend my time on other parts of the project now that this model works well enough.

Deployment #

The model is mainly deployed in two different settings:

- Batch processing. I have a large backlog of store shelf images I use the model to extract data from.

- Interactive. An object detection model runs locally in real time on a mobile device, with detected tags being uploaded and processed on the server. The user receives immediate feedback on which tags were successfully read out, and the user can change image framing in real time.

For both of these I use the beam.cloud service, which I have been reasonably happy with. I have managed to get cold start down to around 20 seconds, but there is probably more room for improvement here since the model is only 600 MB or so.

Future work #

There are many opportunities for improvement on the model itself. A lot of these relate to the format of the sequence the model is trained to output.

OCR from multiple images #

Because the app runs in real time on a mobile device, there are often multiple captures available for a given tag. In many cases each capture contains glare or occlusions on different areas of the tag.

It should be possible to train the model to predict a special token whenever it is unsure about a part of the sequence. A special sampling strategy could then be used to decode in tandem with multiple captures.

Training the model in this regime is probably a bit more tricky, but doable. I imagine a four-step process:

- Images are captured and manually annotated. These captures should be fully readable.

- Introduce artificial imperfections (occlusion/glare/scratches), probably generating several variations for each annotated capture. Imperfections can be placed randomly.

- Decode both the capture and the augmented capture with the model we already trained. Where the two decodings differ is where we want to train the model to predict our new "uncertainty" token.

- Continue training the model on these augmented samples.

This should hopefully train the model to do two major things:

- Predict the uncertainty token whenever it is unsure.

- Learn to recognize when generation has reached an area of the tag that the current capture can contribute to.
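The comparison step in the process above could be sketched as follows. This is a hypothetical illustration, not something I have implemented: the token name `<uncertain/>` is made up, and emitting one uncertainty token per disagreeing token of the clean decoding is just one possible design choice.

```python
from difflib import SequenceMatcher

UNCERTAIN = "<uncertain/>"  # hypothetical name for the new special token


def uncertainty_target(clean_tokens, augmented_tokens):
    """Build a training target for the augmented capture.

    Tokens on which both decodings agree are kept. Tokens of the clean
    decoding that the augmented decoding got wrong or missed are replaced
    by the uncertainty token. Extra tokens that only appear in the
    augmented decoding are dropped.
    """
    target = []
    matcher = SequenceMatcher(a=clean_tokens, b=augmented_tokens, autojunk=False)
    for tag, i1, i2, _j1, _j2 in matcher.get_opcodes():
        if tag == "equal":
            target.extend(clean_tokens[i1:i2])
        else:
            target.extend([UNCERTAIN] * (i2 - i1))
    return target


clean = ["<title>", "J", "u", "i", "c", "e", "</title>"]
augmented = ["<title>", "J", "v", "i", "c", "e", "</title>"]
print(uncertainty_target(clean, augmented))
```

Aligning the two sequences, rather than comparing them position by position, keeps a single dropped token from marking everything after it as uncertain.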

Sampling strategy #

Right now I sample the model using simple greedy decoding, plus a per-field and a total generation length limit.
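In spirit, the decoding loop looks something like the sketch below. It is a simplified illustration rather than the actual implementation: `next_token` is a hypothetical callable standing in for the model (it returns the most likely next token given the tokens generated so far), the limits are arbitrary, and fields are assumed to be flat (not nested).

```python
def greedy_decode(next_token, max_total=64, max_per_field=16, eos="</pricetag>"):
    """Greedy decoding with a total length limit and a per-field limit."""
    output = []
    open_field = None   # name of the field currently being generated
    field_length = 0    # tokens emitted inside that field so far
    while len(output) < max_total:
        token = next_token(output)
        is_close = token.startswith("</")
        if open_field is not None and not is_close and field_length >= max_per_field:
            token = f"</{open_field}>"  # field ran too long: force it closed
            is_close = True
        output.append(token)
        if token == eos:
            break
        if is_close:
            open_field, field_length = None, 0
        elif open_field is not None:
            field_length += 1
        elif (token.startswith("<") and token.endswith(">")
              and not token.endswith("/>") and token != "<pricetag>"):
            open_field, field_length = token[1:-1], 0
    return output
```

The per-field limit stops a single runaway field from eating the whole generation budget, so the remaining fields still get a chance to be decoded.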

A lot of clever stuff can be done, especially when it comes to barcodes with error correcting mechanisms.
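As one small example, EAN-13 carries a check digit. Strictly speaking this is error detection rather than error correction, but it is enough to reject misread candidates. The sketch below assumes several candidate decodings are available, ordered best first (for instance from beam search, which is not what I currently use), and picks the first one whose check digit is valid.

```python
def ean13_is_valid(code):
    """Check the EAN-13 check digit of a 13-digit string."""
    if len(code) != 13 or not (code.isascii() and code.isdigit()):
        return False
    digits = [int(c) for c in code]
    # The first 12 digits are weighted 1, 3, 1, 3, ... from the left.
    total = sum(d * (3 if i % 2 else 1) for i, d in enumerate(digits[:12]))
    return (10 - total % 10) % 10 == digits[12]


def pick_valid(candidates):
    """Return the first candidate (assumed sorted best first) that validates."""
    for code in candidates:
        if ean13_is_valid(code):
            return code
    return None


print(pick_valid(["4006381333932", "4006381333931"]))  # 4006381333931
```

The same idea could be applied inside the sampling loop itself, by pruning continuations that can no longer lead to a valid checksum.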

Conclusion #

Overall, training the model has been a great success. It performs a lot better than any off-the-shelf OCR solution for my very specific use case, and is relatively cheap and quick to run.